More than 70 percent of the Earth’s surface is covered by water, yet scientists know more about space than about what happens in the ocean. One way scientists are trying to improve their understanding of the marine environment is through the use of autonomous underwater vehicles (AUVs), programmable robotic vehicles that can independently study the ocean and its inhabitants.

But data collected by AUVs takes time to analyze and interpret, and scientists often lose the ability to use this critical information in real time.

Mark Moline, director of the School of Marine Science and Policy in the University of Delaware’s College of Earth, Ocean, and Environment, recently co-authored a paper in Robotics on the advantage of linking multi-sensor systems aboard an AUV so that the vehicle can synthesize sound data in real time and independently decide what action to take next.

The idea occurred to Moline and Kelly Benoit-Bird, a colleague at Oregon State University who co-authored the paper, while they were conducting large-scale distribution studies of marine organisms in the Tongue of the Ocean, a deep ocean trench that separates the Andros and New Providence islands in the Bahamas.

Funded through the Office of Naval Research, Moline and Benoit-Bird were investigating whether food sources such as fish, krill and squid play a role in attracting whales to the region.

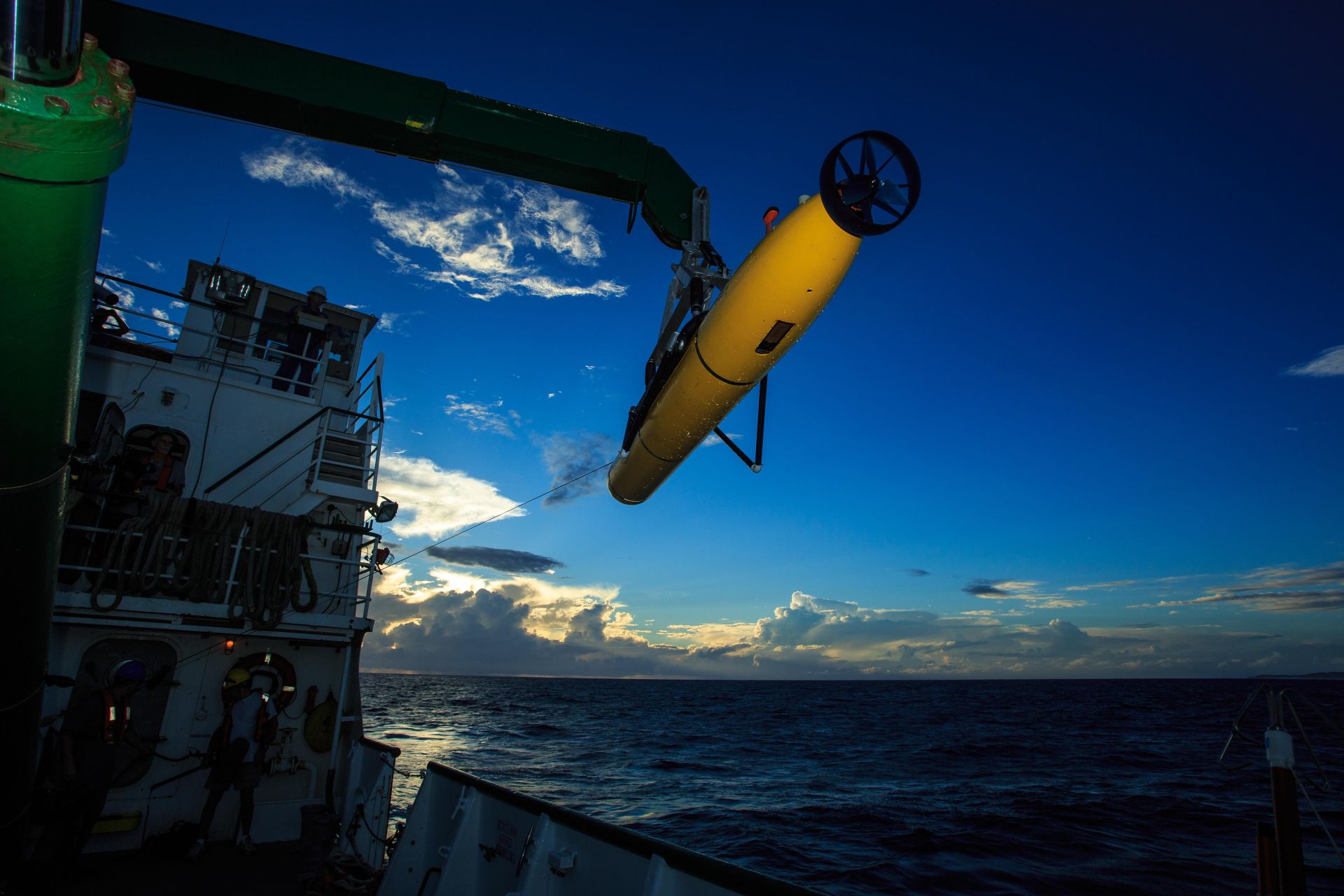

While there, the researchers decided to run a simple experiment to test whether a REMUS600, a modular AUV used for deep-sea research, could be programmed to autonomously make decisions and trigger new missions based on biological information in its environment, such as a certain size or concentration of squid.

“We knew the vehicle had more capabilities than we previously had applied,” said Moline, an early adopter of using robotics technologies in research and co-founder of UD’s Robotic Discovery Laboratory.

Accomplishing this task is more difficult than one might think. For one thing, the ocean is a dynamic environment that is always changing and moving. Similarly, marine organisms like squid are in constant motion: pushed around by currents, migrating, swimming and changing their behavior.

“What you see at any given instance is going to change a moment later,” Moline said.

The researchers preprogrammed the computers onboard the REMUS to make certain decisions. As the vehicle surveyed the ocean 1,640 to 3,000 feet below the surface, the onboard computers analyzed the sonar data, classifying marine organisms in the water by size and density.

When acoustic sensors aboard the vehicle detected the right size and concentration of squid, the vehicle triggered a second mission: to report its position in the water and then run a preprogrammed grid to map the area in finer detail.
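The trigger logic described above can be sketched roughly as follows. This is a minimal illustration, not the REMUS software: the `Detection` type, the threshold values, and the back-and-forth grid pattern are all assumptions made for the example, since the article does not give the actual thresholds or grid layout.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One summarized sonar observation (hypothetical structure)."""
    x_m: float             # vehicle east position, meters
    y_m: float             # vehicle north position, meters
    target_size_cm: float  # estimated animal length
    density_per_m3: float  # estimated animals per cubic meter


# Illustrative thresholds only; the study's real values are not given here.
SIZE_RANGE_CM = (20.0, 40.0)
MIN_DENSITY_PER_M3 = 0.5


def should_trigger(d: Detection) -> bool:
    """True when both the size and the concentration criteria are met."""
    lo, hi = SIZE_RANGE_CM
    return lo <= d.target_size_cm <= hi and d.density_per_m3 >= MIN_DENSITY_PER_M3


def grid_waypoints(cx, cy, half_width_m=200.0, spacing_m=100.0):
    """Back-and-forth ("lawnmower") survey lines centered on (cx, cy)."""
    waypoints = []
    n_lines = int(2 * half_width_m / spacing_m) + 1
    for i in range(n_lines):
        y = cy - half_width_m + i * spacing_m
        west, east = (cx - half_width_m, y), (cx + half_width_m, y)
        # Alternate direction so each line starts on the side where the last one ended.
        waypoints.extend([west, east] if i % 2 == 0 else [east, west])
    return waypoints


def run_survey(detections):
    """Scan detections in order; on the first match, report position and plan a grid."""
    for d in detections:
        if should_trigger(d):
            return {"position": (d.x_m, d.y_m), "grid": grid_waypoints(d.x_m, d.y_m)}
    return None  # nothing matched; the vehicle would keep following its original track
```

The point of the design is that the decision happens on the vehicle: a match immediately produces a new set of waypoints instead of waiting for someone to retrieve the AUV and reprogram it.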

The finer-scale scan revealed a very concentrated collection of squid in one area and a second, less tightly packed mass of similarly sized squid as the scan moved north to south. According to Moline, these are details that might have been missed if the REMUS had been programmed only to keep traveling in a straight line.

“It was a really simple test that demonstrated that it’s possible to use acoustics to find a species, to have an AUV target specific sizes of that species, and to follow the species, all without having to retrieve and reprogram the vehicle to hunt for something that will probably be long gone by the time you are ready,” he said.

The researchers would also like to know how squid and other prey are horizontally distributed in the water column, and how these distributions change based on oceanographic conditions or the presence or absence of predators, such as whales.

Combining available robotics technologies to explore the water in this way can help fill information gaps and may illuminate scales of prey distribution that scientists don’t know exist.

“Imagine what else could we learn if the vehicle was constantly triggering new missions based on real-time information?” Moline said.

With multiple decision loops, he continued, an AUV could follow an entire school of squid or other marine life, see where it went, and create a continuous roadmap of the prey’s travel through the ocean. It’s an exciting idea that has the potential to reveal new details about how prey move and behave: Does the group break up into multiple schools? Does it scatter or congregate more tightly over time? And if so, what drives these changes?
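One way to picture such a repeated decision loop is a tracker that re-aims the vehicle at the densest patch after each scan and logs where it has been. The sketch below is purely illustrative: `scan` is a hypothetical callback standing in for a finer-scale survey, and nothing here reflects the actual REMUS mission software.

```python
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float]


def follow_school(
    start: Point,
    scan: Callable[[Point], Optional[Point]],
    max_loops: int = 10,
) -> List[Point]:
    """Repeatedly scan around the current position and move to the densest patch.

    `scan(center)` is assumed to return the location of the densest patch
    found near `center`, or None if the school has been lost.
    Returns the sequence of positions visited (the "roadmap").
    """
    track = [start]
    position = start
    for _ in range(max_loops):
        densest = scan(position)
        if densest is None:  # school lost; stop triggering new missions
            break
        position = densest
        track.append(position)
    return track
```

Each pass through the loop is one triggered mission, so the returned track is the kind of continuous record of prey movement the researchers describe.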

Another option is programming the AUV to trigger a deeper look only if it sees something specific, like a certain species or a combination of specific predators and prey.

“This is just the beginning,” Moline said.